I have been attempting to capture the process, or to be more accurate the heuristics, of how I analyse security architectures. This was originally driven by the time it took me to document my conclusions and the lack of any particularly well-suited tooling, but it has increasingly become an attempt to communicate the method to other security architects. I also have a sneaking suspicion that a useful chunk of the process could be automated.

Due to the scale and complexity of many of the systems I have worked with, a large part of the process has been to decompose a system, then measure and characterise its components. This allows me to identify the high-risk areas of the system on which to focus my efforts.

The systems and solutions I have built or analysed have commonly relied heavily on re-use of open source off-the-shelf (OSOTS) or commercial off-the-shelf (COTS) packages and components, combined with a custom user interface, a minimal amount of custom middleware glue code and business rules, and a lot of configuration. As a result, much of the excellent work on software security provides tools and approaches that are useful when considering the glue code and business rules, but that tend to cover only a small part of the overall system.

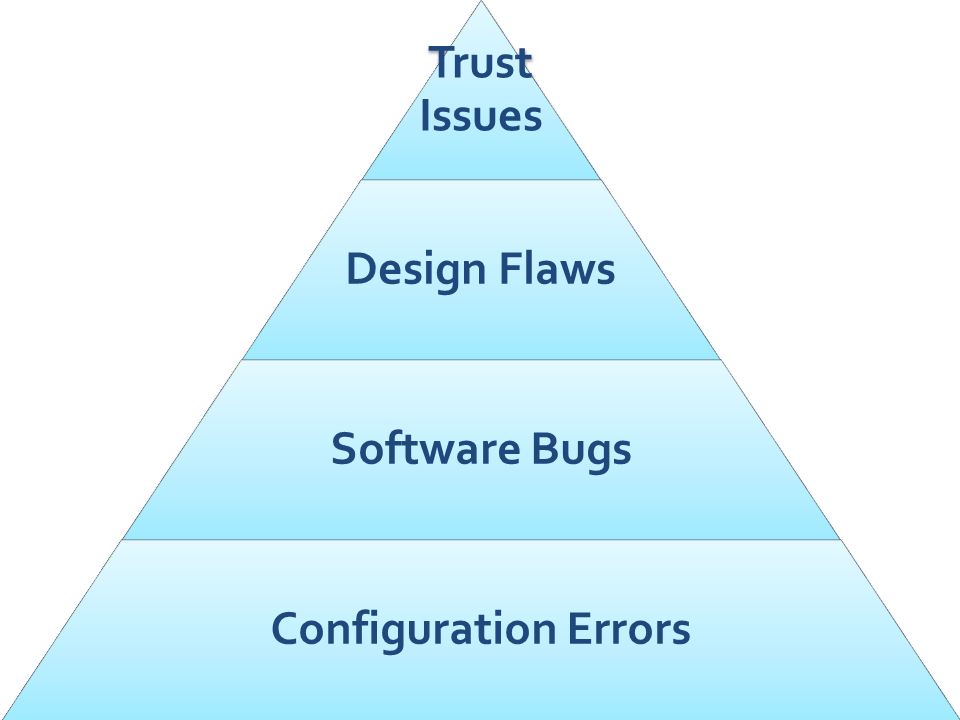

While design flaws, especially around business logic and business rules, are a key concern, it is clear that most systems are currently compromised through configuration errors or software bugs at the technical end or, if you’re unlucky enough to be facing an APT-style adversary, through trust issues at the user end.

Most of those configuration errors or software bugs are going to be present in the OSOTS or COTS packages: these packages not only make up the majority of the total system codebase, but their integration also provides most of the opportunity for gaps and errors. They are either vast codebases (requiring much more effort to assure than the projects integrating them are able to support) or black boxes where the code cannot be inspected, even in an automated manner.

This is a problem. Without spending the extra money on an independently assured product, or being able to inspect the code, it is necessary to fall back on the security architect’s experience of having deployed the component before in order to judge which components will require focused analysis, and to rely on security testing (vulnerability and penetration testing) to pick up where that heuristic fails.

What is visible when you’re dealing with black boxes is the surface of the box: for security purposes, the attack surface. Michael Howard did some great work while he was at Microsoft explaining and promoting the concept of attack surface measurement. He also produced a more formal academic work with Jeannette Wing and Jon Pincus on an attack surface metric called the Relative Attack Surface Quotient (RASQ).

Following the work on RASQ, Jeannette Wing collaborated with Pratyusa Manadhata to develop the detail of attack surface metrics and the evidence for their utility. They developed a model in which an attacker uses a Channel to invoke a Method to send or receive a Data Item. They found a positive correlation (not causation, obviously) between the severity of discovered vulnerabilities reported in Microsoft security bulletins and the following characteristics of the attack surface:

- Method Privilege

- Method Access Rights

- Channel Protocol

- Channel Access Rights

- Data Item Type

- Data Item Access Rights
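To make the model a little more concrete, here is a minimal sketch of how those six characteristics could be recorded for one component. It assumes, as I understand the published metric, that each resource contributes the ratio of its damage potential (privilege, protocol or data item type) to the effort implied by its access rights. The class names, the example resources and every numeric score are hypothetical and only there for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    METHOD = "method"
    CHANNEL = "channel"
    DATA_ITEM = "data item"


@dataclass(frozen=True)
class Resource:
    name: str
    kind: Kind
    # Assumed ordinal score for privilege / protocol / data item type.
    damage_potential: int
    # Assumed ordinal score for access rights: higher means harder to reach.
    access_rights: int

    def contribution(self) -> float:
        # Assumption: damage potential divided by attacker effort.
        return self.damage_potential / self.access_rights


def attack_surface(resources):
    """Sum the contributions separately for methods, channels and data items."""
    totals = {kind: 0.0 for kind in Kind}
    for resource in resources:
        totals[resource.kind] += resource.contribution()
    return totals


# Hypothetical component with made-up scores.
web_server = [
    Resource("handle_request", Kind.METHOD, damage_potential=4, access_rights=1),
    Resource("admin_reload", Kind.METHOD, damage_potential=5, access_rights=5),
    Resource("tcp/443", Kind.CHANNEL, damage_potential=2, access_rights=1),
    Resource("config file", Kind.DATA_ITEM, damage_potential=3, access_rights=3),
]
surface = attack_surface(web_server)
```

Keeping the three totals separate, rather than collapsing them into one number, preserves which dimension (methods, channels or data items) is driving the exposure.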

It surprises me that these findings haven’t been lauded more in the security community. They identified a basis for estimating the severity of future, as yet undiscovered vulnerabilities (the Rumsfeldian known unknowns of IT security) from the measurable characteristics of a system’s attack surface. This doesn’t describe the probability or uncertainty of a vulnerability being exploited, but it does allow us to compare attack surfaces and identify the relative future severity of the vulnerabilities we will have to deal with. Conversely, this gives us a series of indicators within our control that may allow us to reduce the severity of future vulnerabilities in key systems and components.
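As a rough illustration of that kind of comparison, the sketch below ranks a few components by their measured attack surface so the largest can be examined first. The component names and the (method, channel, data item) totals are invented, and adding the three dimensions into a single figure is my own simplifying assumption, not part of the published metric.

```python
def rank_components(surfaces):
    """Order component names from largest to smallest total attack surface.

    `surfaces` maps a component name to a hypothetical
    (method, channel, data item) tuple of measured totals.
    """
    # Assumption: the three dimensions are simply added together.
    return sorted(surfaces, key=lambda name: sum(surfaces[name]), reverse=True)


# Made-up measurements for three hypothetical components.
surfaces = {
    "message queue": (1.0, 1.0, 0.5),
    "web server": (5.0, 2.0, 1.0),
    "database": (3.0, 1.0, 2.5),
}
ranking = rank_components(surfaces)
```

The ranking says nothing about how likely any component is to be attacked, only where future vulnerabilities are likely to be relatively more severe.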

In my next post I will talk about how I decompose and model a system to investigate the attack surface metrics across the subsystems and components.